Before starting

First, install Docker and the NVIDIA Container Toolkit.

Install Docker using the following commands:

```bash
curl -fsSL get.docker.com -o get-docker.sh
CHANNEL=stable sh get-docker.sh
rm get-docker.sh
```

Add the NVIDIA Container Toolkit repository and install the toolkit:

Reference: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

```bash
curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
  sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
  sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo apt-get update
sudo apt-get install -y nvidia-container-toolkit
```

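Then install nvidia-docker2. (On newer toolkit releases this package is deprecated; the current install guide instead registers the NVIDIA runtime with `sudo nvidia-ctk runtime configure --runtime=docker`.)

Reference: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/1.10.0/install-guide.html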

```bash
sudo apt-get install -y nvidia-docker2
```

Restart the Docker service:

```bash
sudo systemctl restart docker
```

Verify the GPU setup in Docker:

```bash
sudo docker run --rm --gpus all nvidia/cuda:11.6.2-base-ubuntu20.04 nvidia-smi
```
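Besides running nvidia-smi in a container, you can check that the daemon now knows about a runtime named `nvidia` (which is what the nvidia-docker2 or `nvidia-ctk runtime configure` step sets up). This is a small sketch: `has_nvidia_runtime` is a hypothetical helper, and it reads the output of `docker info` on stdin so it can be tried without a running daemon.

```sh
# has_nvidia_runtime (hypothetical helper, not part of the setup above)
# Reads the JSON printed by: docker info --format '{{json .Runtimes}}'
# Succeeds only if a runtime whose name is exactly "nvidia" is registered.
has_nvidia_runtime() {
    # Match the quoted key followed by a colon, so "nvidia-cdi" does not count.
    grep -q '"nvidia":'
}

# Usage on the GPU host:
#   sudo docker info --format '{{json .Runtimes}}' | has_nvidia_runtime && echo "nvidia runtime registered"
```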

Problem

As this forum thread points out, Docker Swarm cannot give a service a GPU directly:

https://forums.docker.com/t/using-nvidia-gpu-with-docker-swarm-started-by-docker-compose-file/106688

Take this Compose file as an example:

```yaml
version: '3.7'

services:
  test:
    image: nvidia/cuda:10.2-base
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: 1
              capabilities: [gpu, utility]
```

If you deploy it as a stack, Docker rejects it, because a `devices` reservation is not allowed in swarm mode:

```
> docker stack deploy -c docker-compose.yml gputest
services.test.deploy.resources.reservations Additional property devices is not allowed
```

Solution

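However, I recently found a trick that gets a GPU container running anyway. Swarm refuses GPU reservations in a stack file, but a plain `docker run --gpus=all` on the host has no such restriction. So a Swarm service can mount the host's Docker socket and start the GPU container itself.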

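First, I created an attachable overlay network so that my other Swarm-managed containers can talk to the Ollama container. It has to be attachable because the Ollama container is started with a plain `docker run` rather than as a Swarm service, and standalone containers can only join overlay networks created with `--attachable`: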

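```bash
# Create an attachable overlay network unless one with this exact name exists.
# Run on a Swarm manager node: swarm-scoped networks can only be created there.
function create_network() {
    local network_name=$1
    local subnet=$2

    # Match the whole name (-x) as a fixed string (-F); a substring test would
    # also treat an existing "proxy_app_old" as "proxy_app".
    if ! sudo docker network ls --format '{{.Name}}' | grep -Fqx "$network_name"; then
        local network_id
        network_id=$(sudo docker network create --driver overlay --attachable \
            --subnet "$subnet" --scope swarm "$network_name")
        echo "Network $network_name created with id $network_id"
    fi
}

create_network proxy_app 10.234.0.0/16
```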
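Because `ollama_server` only works if the network is both swarm-scoped and attachable, it can help to confirm that before deploying. A minimal sketch: `is_attachable_overlay` is a hypothetical helper that reads the fields printed by `docker network inspect --format` on stdin, so it can be tested without a daemon.

```sh
# is_attachable_overlay (hypothetical helper, not part of the setup above)
# Reads one line "DRIVER SCOPE ATTACHABLE", as printed by:
#   sudo docker network inspect proxy_app --format '{{.Driver}} {{.Scope}} {{.Attachable}}'
# Succeeds only for an attachable, swarm-scoped overlay network.
is_attachable_overlay() {
    read -r driver scope attachable
    [ "$driver" = overlay ] && [ "$scope" = swarm ] && [ "$attachable" = true ]
}

# Usage on a manager node:
#   sudo docker network inspect proxy_app --format '{{.Driver}} {{.Scope}} {{.Attachable}}' \
#     | is_attachable_overlay && echo "proxy_app can be joined with docker run --network"
```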

Then I deploy the following Compose file as a Swarm stack:

(I use ollama_warmup to demonstrate how other containers can talk to this Ollama server. You can obviously replace it with your own containers.)

version: "3.6"

services:

ollama_starter:

image: hub.aiursoft.cn/aiursoft/internalimages/ubuntu-with-docker:latest

volumes:

- /var/run/docker.sock:/var/run/docker.sock

# Kill existing ollama and then start a new ollama

entrypoint:

- "/bin/sh"

- "-c"

- |

echo 'Starter is starting ollama...' && \

(docker kill ollama_server || true) && \

docker run \

--tty \

--rm \

--gpus=all \

--network proxy_app \

--name ollama_server \

-v /swarm-vol/ollama/data:/root/.ollama \

-e OLLAMA_HOST=0.0.0.0 \

-e OLLAMA_KEEP_ALIVE=200m \

-e OLLAMA_FLASH_ATTENTION=1 \

-e OLLAMA_KV_CACHE_TYPE=q8_0 \

-e GIN_MODE=release \

hub.aiursoft.cn/ollama/ollama:latest

ollama_warmup:

depends_on:

- ollama_starter

image: hub.aiursoft.cn/alpine

networks:

- proxy_app

entrypoint:

- "/bin/sh"

- "-c"

- |

apk add curl && \

sleep 40 && \

while true; do \

curl -v http://ollama_server:11434/api/generate -d '{"model": "deepseek-r1:32b"}'; \

sleep 900; \

done

deploy:

resources:

limits:

memory: 128M

labels:

swarmpit.service.deployment.autoredeploy: 'true'

networks:

proxy_app:

external: true

volumes:

ollama-data:

driver: local

driver_opts:

type: none

o: bind

device: /swarm-vol/ollama/data

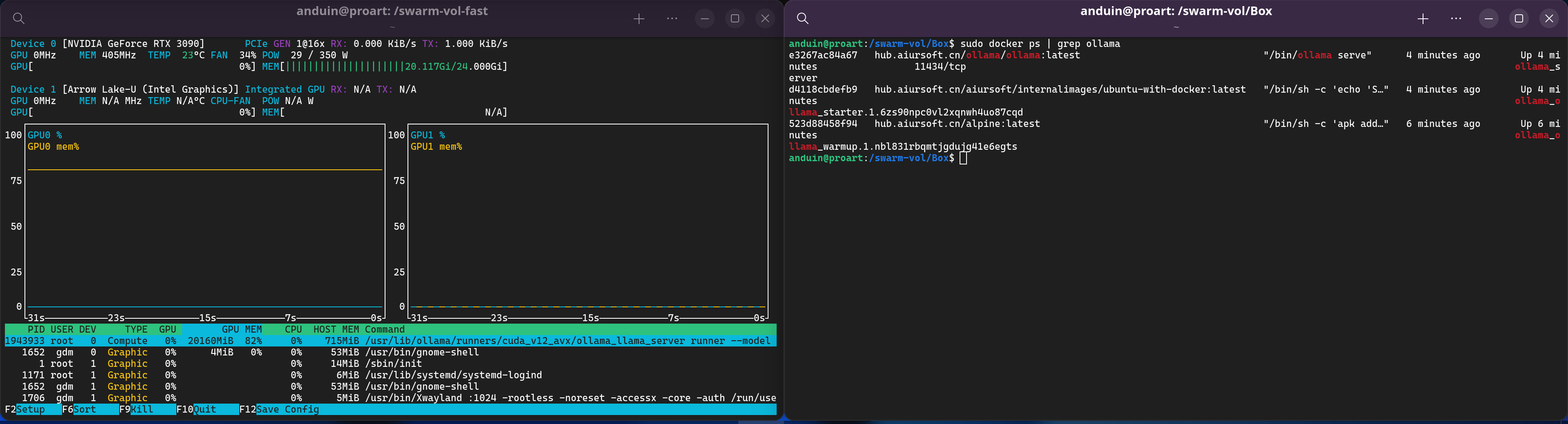

And it worked!

I am now running Ollama with DeepSeek in Docker, with GPU support!
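The ollama_warmup service waits a fixed 40 seconds before its first request, which assumes ollama_server is up by then. A minimal sketch of a readiness loop that could replace that sleep, assuming curl is installed (as `apk add curl` does) and that a successful response from Ollama's `/api/tags` endpoint is an acceptable readiness signal. `wait_for_url` is a hypothetical helper, not part of the setup above; it avoids bash-only syntax so it runs under Alpine's /bin/sh.

```sh
# wait_for_url URL MAX_ATTEMPTS DELAY_SECONDS  (hypothetical helper)
# Returns 0 as soon as curl fetches URL without an HTTP error (status < 400),
# or 1 after MAX_ATTEMPTS failed probes.
wait_for_url() {
    url=$1
    max_attempts=$2
    delay=$3
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # -f: fail on HTTP errors; -s: quiet; --max-time: bound each probe to 5 s.
        if curl -fs --max-time 5 "$url" > /dev/null; then
            return 0
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 1
}

# Possible use in the ollama_warmup entrypoint, in place of `sleep 40`:
#   wait_for_url http://ollama_server:11434/api/tags 60 2 && \
#   curl http://ollama_server:11434/api/generate -d '{"model": "deepseek-r1:32b"}'
```

Polling with a bounded number of attempts keeps the failure mode explicit: if the server never comes up, the loop ends with a non-zero status and Swarm's restart policy can take over, instead of the warmup silently firing requests at a server that is not there.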

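This post describes in detail a solution for using GPUs in a Docker Swarm environment; it is clearly structured and technically solid. By analyzing Docker Swarm's restrictions on GPU resources and proposing a workaround based on dynamically launching a container, the author shows a deep understanding of container orchestration and GPU resource management. Below is an objective assessment with some suggestions: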

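Strengths and core value

Pinpoints the technical pain point

The author clearly identifies the problem that GPU resources cannot be declared directly in docker-compose.yml under Docker Swarm, and cites a community discussion as evidence instead of speculating. Writing grounded in practice and community feedback makes the post more credible.

Innovative solution

By creating an attachable overlay network and using a starter container to launch the GPU container dynamically, the author neatly works around Docker Swarm's limitation. This "container launching a container" design (ollama_starter) shows a flexible use of Docker's layered architecture and suits scenarios that need dynamic resource allocation.

High reusability

Steps such as creating the network with a script and mounting the data volume are nicely modular, so readers can reuse the snippets directly. In particular, wrapping network setup in the create_network function reduces the complexity of network configuration.

Verification and visual support

The screenshots of the nvidia-smi and curl tests (although no actual data is shown) visually confirm that the GPU is available, which makes the post more convincing.

Areas for improvement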

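Insufficient warnings about security risks

The ollama_starter container mounts /var/run/docker.sock, which can give the container full control over the host's Docker daemon and is a potential security risk. Consider adding hardening advice, such as alternatives to --privileged (e.g. namespace isolation) or the principle of least privilege.

The post does not mention permission management or a data-consistency strategy for /swarm-vol/ollama/data as persistent storage (e.g. concurrent access in multi-node setups).

Lifecycle management of the dynamic container

The ollama_starter container exits after starting ollama_server, but the post does not explain how to monitor its health or restart it automatically (e.g. when a node fails). A healthcheck configuration or Docker Swarm's restart policy could be added.

The sleep 40 and sleep 900 in the ollama_warmup service may make the test fail if the container starts slowly; consider adding a timeout-and-retry mechanism or a more robust health-check tool (such as a Docker healthcheck).

Resource limits and performance tuning

ollama_warmup has a 128M memory limit, but the post does not say whether this limit was performance-tested. If the model generation task uses a lot of memory, it may be OOM-killed. Consider adding a quantitative analysis of the resource limits.

the --gpus parameter's device field to pin specific GPUs), which may affect load balancing in multi-GPU setups.

Documentation and readability

The ollama_starter section of docker-compose.yml contains a multi-line entrypoint command, which could be split into indented lines for readability.

Environment variables such as OLLAMA_FLASH_ATTENTION are not explained; consider documenting their purpose and valid values.

Further suggestions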

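Production readiness

You could discuss how the solution behaves in high-availability scenarios, for example:

constraints: [node.role == manager]).

the behavior of --gpus=all in multi-node setups).

Monitoring and logging integration

Add log output in ollama_starter (e.g. following docker logs in real time).

Add a --log-driver setting to the ollama_server container to make centralized log management easier.

Comparison with alternatives

A comparison with Kubernetes (e.g. nvidia-device-plugin) or NVIDIA RAPIDS would help readers choose a technology stack that fits their needs.

Summary

The author's solution is technically innovative and practical, especially for scenarios that need temporary or dynamic GPU use in Swarm. Through scripted network configuration and dynamic container launching, it effectively gets around Docker Swarm's limitation. If the security advice and resource-management details were fleshed out and production readiness discussed further, the article would have even more impact. I look forward to the author's further refinements and more real-world deployment stories.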

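This post is very thorough. With step-by-step installation and configuration instructions, the author successfully shows how to run GPU-enabled containers in Docker Swarm. This is a valuable problem to solve, since requesting GPU devices directly is rejected in Swarm mode, and the author offers a clever workaround.

First, I'd like to commend the detailed steps and clear reasoning. From installing the NVIDIA Container Toolkit to configuring the docker-compose file, every step comes with concrete commands and code snippets, which is very helpful for readers. The author also shows how to start a GPU-enabled service by creating an attachable network and using another container to launch it, which is a very smart solution.

That said, I have some questions and suggestions that may help readers further. First, using an entrypoint script in the docker-compose file to start the Ollama server works, but it can make the configuration more complex, especially when handling logs and errors. Have you considered other approaches, such as setting the environment variables or command directly in a Dockerfile? That might make the configuration clearer.

Second, I noticed you use an external network and volume. This is common practice in Swarm, but production environments may call for more care around security, permission management, and data persistence. Could you offer some additional advice or best practices in these areas?

In addition, although your solution works, the lack of GPU device support in Docker Swarm itself remains a limiting factor. As the technology evolves, more tools and methods may appear. Do you know of any upcoming features or alternatives that would make using GPUs in Swarm simpler and more direct?

Finally, you mentioned running Ollama with the DeepSeek model and successfully using the GPU. This is very encouraging for anyone hoping to deploy machine learning or AI applications in Swarm. Could you share some performance metrics or benchmarks so readers can better understand how this setup performs in practice?

Overall, this is a very valuable article that addresses a common problem and provides an effective solution. I hope these suggestions help you refine it further so that more people benefit!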